A lidar camera sensor can improve photography and augmented reality experiences by capturing depth. It is currently available on the iPhone 12 Pro and iPhone 13 Pro, as well as Apple’s iPad Pro models, and it may soon appear on mixed-reality headsets and other devices.

Apple’s devices make it clear that the company is invested in this technology. The small black dot near the rear camera lenses is the lidar sensor, and it introduces a new way of sensing depth that can enhance photography, augmented reality, 3D scanning, and much more.

Lidar paired with cameras is rapidly gaining traction, and Apple wants to keep it that way. It has already become a crucial component of AR headsets and automotive applications. Is it necessary? Perhaps not. We’ll explore what we know about it, how Apple uses it, and what the future holds for this technology.

By improving how devices perceive depth, lidar sensors can improve the overall user experience in a variety of applications. We will likely see lidar integrated into other devices soon, especially if Apple continues to lead the charge.

How does a lidar camera work to sense depth?

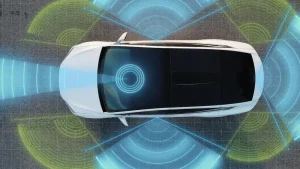

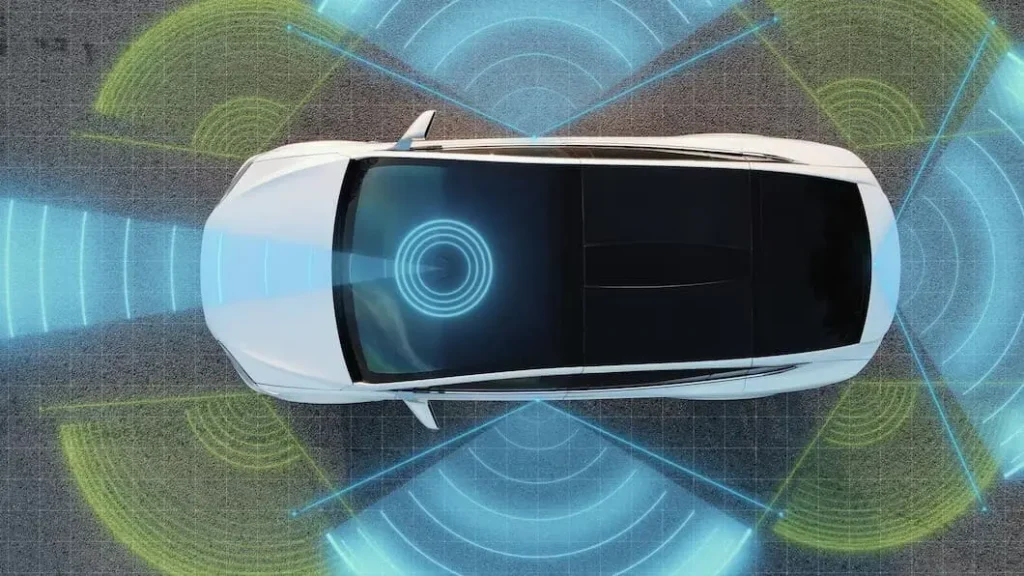

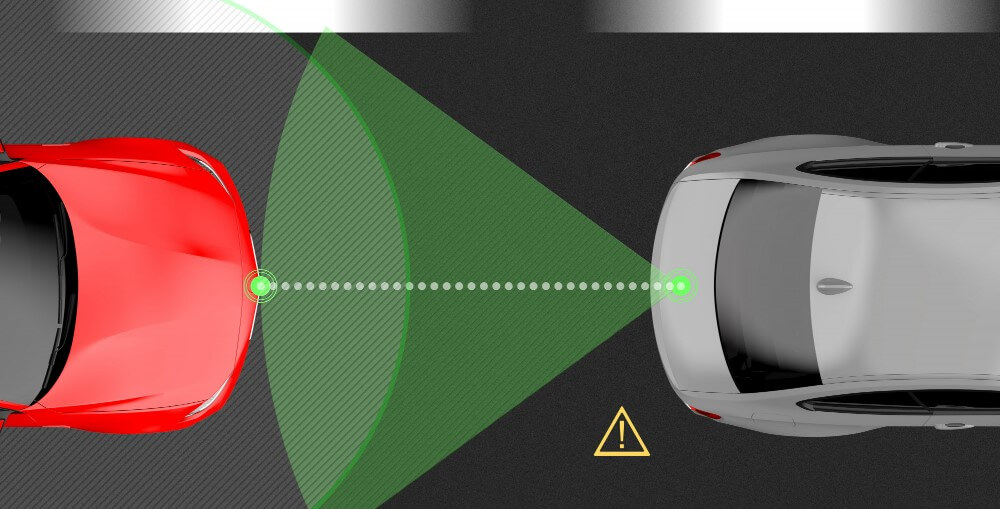

Lidar is a type of time-of-flight camera: it senses depth by timing how long emitted light takes to bounce back. Unlike some other smartphones that measure depth with a single light pulse, a lidar-equipped phone sends out waves of light pulses as a spray of infrared dots and measures each one with its sensor. The result is a field of points that maps out distances and can mesh the dimensions of a space and the objects in it.
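To make the time-of-flight idea concrete, here is a minimal Python sketch of the underlying arithmetic: a pulse travels to a surface and back, so the distance is the speed of light multiplied by the round-trip time, divided by two. This is an idealized illustration, not Apple’s implementation; the function names and the sample timings are assumptions made up for this example.

```python
# Speed of light in a vacuum, in meters per second (a physical constant).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in meters for a pulse's round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the surface and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def depth_map_from_timings(timings):
    """Convert a grid of per-dot round-trip times into a grid of distances."""
    return [[distance_from_round_trip(t) for t in row] for row in timings]


if __name__ == "__main__":
    # Hypothetical round-trip times for a 2x2 patch of dots, in seconds.
    sample_timings = [[10e-9, 20e-9], [20e-9, 33e-9]]
    for row in depth_map_from_timings(sample_timings):
        print([round(d, 2) for d in row])
```

The timings involved are tiny: a surface a few meters away returns light within tens of nanoseconds, which is why this kind of sensing needs specialized hardware rather than an ordinary camera sensor.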

Humans can’t see these light pulses, but night vision cameras can. This approach lets a lidar camera sensor determine an environment’s depth quickly and accurately.
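Once each dot has a measured distance, the dots can be turned into 3D points that map out a space. The sketch below shows one common way to do that, using a simple pinhole camera model. It is a hedged illustration only: the article does not say how Apple builds its maps, and the function name and the intrinsics `fx`, `fy`, `cx`, `cy` (focal lengths and image center, in pixels) are hypothetical values chosen for the example.

```python
def back_project(depth_rows, fx, fy, cx, cy):
    """Turn a depth grid (meters) into a list of (x, y, z) camera-space points.

    depth_rows: rows of depth values, with None or 0 meaning "no return".
    fx, fy:     assumed focal lengths in pixels (hypothetical intrinsics).
    cx, cy:     assumed image center in pixels (hypothetical intrinsics).
    """
    points = []
    for v, row in enumerate(depth_rows):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue  # skip dots that produced no valid measurement
            # Pinhole model: scale the pixel offset from the center by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Hypothetical 2x2 depth patch; one dot has no return.
    depth = [[2.0, None], [2.5, 4.0]]
    for point in back_project(depth, fx=2.0, fy=2.0, cx=0.0, cy=0.0):
        print(point)
```

A list of points like this is the raw material for a mesh: neighboring points can be connected into triangles to approximate the surfaces in a room.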

Doesn’t this work like Face ID?

Yes, lidar technology is similar to the TrueDepth camera that enables Face ID on the iPhone, but it has a longer range. The TrueDepth camera also projects a pattern of infrared dots, but it only works up to a few feet away. The lidar sensors on the iPad Pro and iPhone 12 Pro, on the other hand, work at a range of up to 5 meters, so they can sense depth much further away.

Many other technologies use Lidar already.

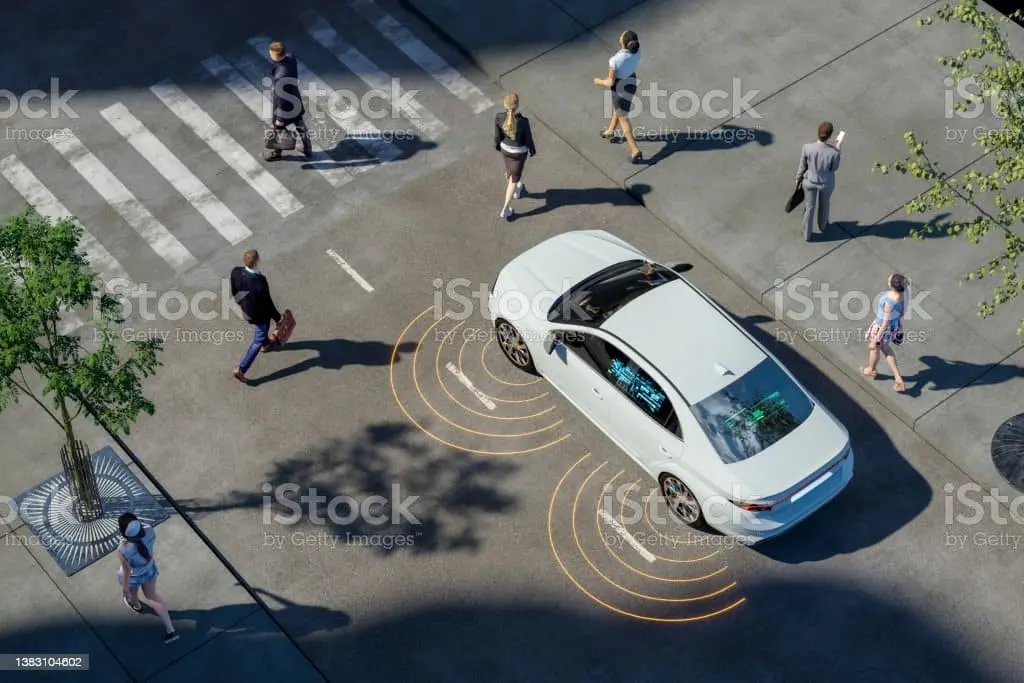

In recent years, lidar technology has become increasingly ubiquitous and has been integrated into a wide range of devices. It is commonly used in self-driving cars, drones, and robotics. HoloLens 2 uses similar technology to map out a space before layering in 3D virtual objects. Lidar camera technology is even in VR headsets.

Lidar on phones is relatively new, but depth-sensing technology has been around for a while. One of the first products to use infrared depth-scanning was the Kinect, Microsoft’s depth-sensing accessory for the Xbox. In 2013, Apple acquired PrimeSense, the company that developed the Kinect technology. This purchase ultimately contributed to the development of Apple’s TrueDepth and rear lidar camera sensors.

iPhone 12 Pro and 13 Pro cameras work better with lidar.

Lidar technology has significantly enhanced the performance of the iPhone 12 Pro and iPhone 13 Pro cameras. Smartphone time-of-flight cameras are commonly used to improve focus accuracy and speed, and the iPhone 12 Pro does the same. Apple promises better low-light focus, up to six times faster in low-light conditions. Lidar depth-sensing also enhances night portrait mode. Our review of the iPhone 12 Pro Max showed that lidar made a difference. The iPhone 13 Pro has similar lidar technology paired with a better camera.

The iPhone 12 Pro’s lidar camera technology could also lead to more 3D photos. App developers are already using the TrueDepth camera’s depth-sensing capabilities in a similar way. With third-party developers having access to lidar as well, some innovative and exciting applications could emerge, and some developers are already exploring it.

Lidar also greatly enhances augmented reality.

A lidar camera sensor enhances the augmented reality (AR) capabilities of iPhones and iPads. AR apps use lidar to create occlusion, which hides virtual objects behind real ones. They can also load much faster and build a detailed map of a room, so you can place virtual objects in more complex scenes, such as on a table or chair.
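Occlusion comes down to a per-pixel depth comparison: a virtual pixel is drawn only if it is closer to the camera than the real surface that lidar measured at that spot. The Python sketch below illustrates that test under simplifying assumptions. It is not how ARKit or any specific app implements it, and the function name and sample data are hypothetical.

```python
def composite_with_occlusion(camera_pixels, real_depth, virtual_pixels, virtual_depth):
    """Overlay virtual pixels on a camera image, hiding ones behind real surfaces.

    All four arguments are grids (lists of rows) of the same shape.
    virtual_pixels holds None wherever the virtual object does not cover a pixel.
    Depths are distances from the camera in meters.
    """
    output = []
    for cam_row, real_row, virt_row, vdepth_row in zip(
        camera_pixels, real_depth, virtual_pixels, virtual_depth
    ):
        out_row = []
        for cam, real_z, virt, virt_z in zip(cam_row, real_row, virt_row, vdepth_row):
            # Draw the virtual pixel only when it is in front of the real surface.
            if virt is not None and virt_z < real_z:
                out_row.append(virt)
            else:
                out_row.append(cam)
        output.append(out_row)
    return output


if __name__ == "__main__":
    # Hypothetical scene: a wall 3 m away and a chair 1 m away,
    # with a virtual robot placed 2 m from the camera.
    camera = [["wall", "chair"]]
    real_z = [[3.0, 1.0]]
    virtual = [["robot", "robot"]]
    virtual_z = [[2.0, 2.0]]
    print(composite_with_occlusion(camera, real_z, virtual, virtual_z))
```

In this made-up scene the robot shows up in front of the wall but disappears behind the chair, which is the effect that makes virtual objects feel anchored in a real room.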

For example, Hot Lava on Apple Arcade uses lidar to scan a room and its obstacles, letting you place virtual objects on stairs and hide them behind real-life objects. Our AR experiences will get even richer as more developers add lidar support.

Indeed, the lidar technology in the iPhone 12 Pro, iPhone 13 Pro, and iPad Pro could help develop more advanced AR and VR headsets. Such devices could create mixed-reality experiences that seamlessly blend virtual and real things. 3D lidar mapping data could also be crowdsourced, opening up even more possibilities for creating accurate virtual environments.

Other phones that use technology like this

Apple is not the first company to put depth-sensing on a phone. Tango was Google’s early attempt at 3D mapping and augmented reality on smartphones, and Tango phones were equipped with a depth-sensing camera array. Sadly, Tango didn’t make it to more than a few devices, while Apple’s lidar camera technology is already in multiple products and used for more than just augmented reality.

Bottom Line

The lidar camera is one of the coolest and most innovative features of the latest iPhone and iPad Pro models. With this advanced sensor, you can create accurate and detailed maps, models, and augmented reality experiences. Lidar lets users capture more detailed and realistic images and videos, and it lets developers create new and exciting AR applications. In short, the lidar camera enhances the capabilities of the iPhone and iPad Pro and opens up new possibilities for creative expression and immersive experiences.